Welcome to our new series on evaluation, where we delve into the world of assessing program impact, measuring success, and driving continuous improvement. In this series, we will explore various aspects of evaluation, from evaluation methodologies to best practices in data collection and analysis. Along the way, you will gain insight into the techniques that underpin effective program evaluation and be better equipped to make informed decisions that improve the effectiveness and impact of your programs.

Program evaluation can unfold in many different ways, but it typically includes five key phases: planning, data collection, data analysis, interpretation and reporting, and evaluation utilization. We will kick off this series by exploring the planning phase of an evaluation.

During the planning phase, the Goldstream Group works closely with clients to clarify an evaluation’s purpose, objectives, and questions. In turn, this information guides the evaluation design, including the selection of appropriate evaluation methods and data collection instruments. We believe that engaging program staff in the evaluation planning process from the earliest stages fosters inclusivity, transparency, and shared ownership, leading to more meaningful and relevant evaluations and increased utilization of evaluation findings.

For example, one program the Goldstream Group evaluated was a STEM summer camp. The program staff were specifically interested in measuring whether the camp contributed to participants’ STEM career interest, self-efficacy, and knowledge. Because the staff wanted to know how participants’ perceptions and knowledge changed, we selected an outcomes-based evaluation design. An outcomes-based approach to program evaluation focuses on measuring a program’s outcomes or results rather than its activities. It offers a systematic way to assess the extent to which a program has achieved its intended outcomes, and it helps stakeholders understand the program’s effectiveness and impact (Fitzpatrick, Sanders, and Worthen, 2004). The evaluation found that camp participants did increase their STEM career interest, self-efficacy, and knowledge, and this information helped inform the development of subsequent informal science projects.

In another example, the Goldstream Group evaluated a curriculum development program in which the staff used an innovative approach to developing K-12 science curricula. The program staff were specifically interested in understanding the strengths and weaknesses of their new model. In this case, we focused on documenting and describing how each part of the curriculum development model was implemented, an example of a descriptive evaluation design. This information guided the program staff in making evidence-based decisions about their curriculum development model and contributed to their subsequent statewide implementation of the model.

In our next issue, we will share information about the tools evaluators use to collect data, including surveys, interviews, observations, document review, and secondary data review.

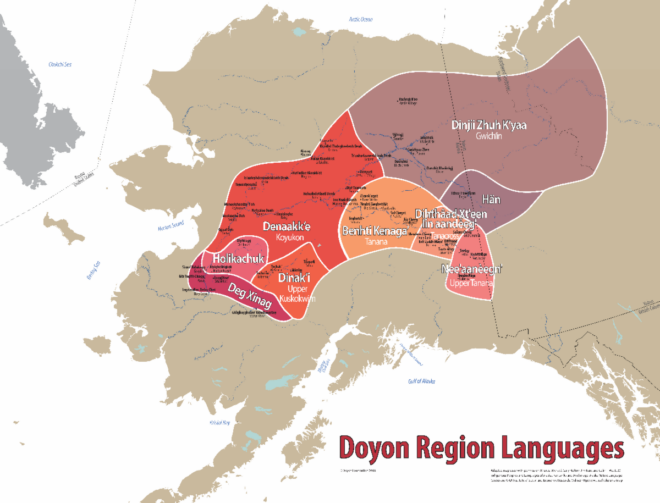

The Doyon Foundation, an Alaska Native tribal organization whose mission is to provide educational, career, and cultural opportunities to enhance the quality of life for Doyon shareholders, received a three-year grant from the Alaska Native Education Program to implement

The Goldstream Group is excited to partner with the